Raccoon Image Detection using Keras

Overview

This post is about locating a raccoon in an input image.

We collect raccoon images and record the coordinates of the region in each image where the object of interest (the raccoon) appears.

This technique is called image annotation.

For simplicity, we use bounding-box annotation.

Each object's location is described by two corners of its box: the top-left corner (x1, y1) and the bottom-right corner (x2, y2). Every image is mapped to the coordinates of its box (one row per box).

We then follow standard machine-learning steps in Keras to train a model and predict the box for an unseen image.
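Box coordinates come in two common layouts. This dataset uses corners, (x1, y1, x2, y2). Many loss functions and tools instead use the top-left corner plus width and height, (x, y, w, h). If the two are mixed up, areas and overlaps come out wrong without any error being raised. The sketch below converts between the two layouts. Both helper names are hypothetical and are not part of Keras.

```python
def corners_to_xywh(box):
    """Convert (x1, y1, x2, y2) corners to (x, y, width, height)."""
    x1, y1, x2, y2 = box
    return (x1, y1, x2 - x1, y2 - y1)


def xywh_to_corners(box):
    """Convert (x, y, width, height) back to (x1, y1, x2, y2) corners."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


# Hypothetical box: top-left (10, 20), bottom-right (50, 80)
print(corners_to_xywh((10, 20, 50, 80)))  # (10, 20, 40, 60)
```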

Solution

```python
## Installation
!pip install netron
!pip install keras_sequential_ascii
```

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential, load_model
from tensorflow.keras.preprocessing.image import ImageDataGenerator, array_to_img, img_to_array, load_img
from tensorflow.keras.layers import Dense, Reshape, Flatten, Dropout, Activation, BatchNormalization
from tensorflow.keras.optimizers import SGD, Adam
from tensorflow.keras.callbacks import History, ModelCheckpoint

gpus = tf.config.experimental.list_physical_devices('GPU')
print("Num GPUs Available: ", len(gpus))

if gpus:
    # Restrict TensorFlow to allocate at most 2 GB (memory_limit=2048 MB) on the first GPU
    try:
        tf.config.experimental.set_virtual_device_configuration(
            gpus[0],
            [tf.config.experimental.VirtualDeviceConfiguration(memory_limit=2048)])
        logical_gpus = tf.config.experimental.list_logical_devices('GPU')
        print(len(gpus), "Physical GPUs,", len(logical_gpus), "Logical GPUs")
    except RuntimeError as e:
        # Virtual devices must be set before GPUs have been initialized
        print(e)
```

Num GPUs Available: 1

1 Physical GPUs, 1 Logical GPUs

```python
### Imports
import os
import csv

import numpy as np
import pandas as pd
import tensorflow as tf

# Use tensorflow.keras throughout; mixing it with standalone keras breaks model building
from tensorflow.keras import Model
from tensorflow.keras.applications.mobilenet import MobileNet, preprocess_input
from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping, ReduceLROnPlateau, Callback
from tensorflow.keras.layers import Conv2D, Reshape
from tensorflow.keras.utils import Sequence
from tensorflow.keras.backend import epsilon

%matplotlib inline
import matplotlib.pyplot as plt
from matplotlib.patches import Rectangle

from PIL import Image

from keras_sequential_ascii import keras2ascii
```

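```python
## Constants
IMAGE_SIZE = 128   # every image is resized to IMAGE_SIZE x IMAGE_SIZE
CHANNELS = 3       # RGB
IMAGE_PATH = '/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images'
TRAIN_LABELS = 'train_labels.csv'
PATIENCE = 10      # epochs without improvement before early stopping
```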

```python
# Loading data
train_df = pd.read_csv(TRAIN_LABELS)
train_df.shape
```

(173, 8)

```python
train_df.head()
```

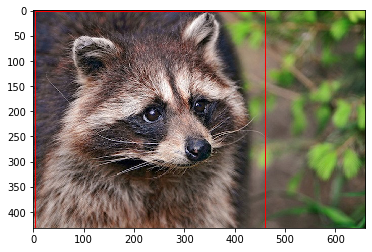

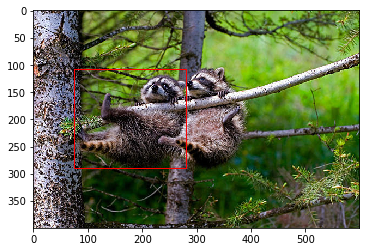

```python
## Images
raccoons = [os.path.join(IMAGE_PATH, f) for f in os.listdir(IMAGE_PATH)]
print('total raccoon images {}'.format(len(raccoons)))
```

total raccoon images 200

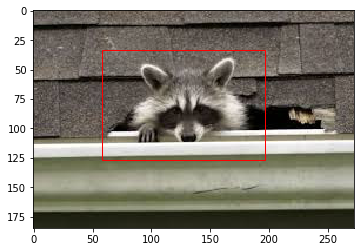

```python
# Show the first few annotated images with their ground-truth boxes
limit = int((len(train_df.index) / 25))
print(limit)

for index in range(limit):
    filename = IMAGE_PATH + "/" + (train_df['filename'][index])
    width = train_df['width'][index]
    height = train_df['height'][index]
    xmin = train_df['xmin'][index]
    ymin = train_df['ymin'][index]
    xmax = train_df['xmax'][index]
    ymax = train_df['ymax'][index]
    imageObject = Image.open(filename)
    print(filename)
    print("W X H {}".format(imageObject.size))
    # Show the image with its bounding box drawn in red
    plt.imshow(imageObject)
    rect = Rectangle((xmin, ymin), xmax - xmin, ymax - ymin, linewidth=1, edgecolor='r', facecolor='none')
    ax = plt.gca()
    ax.add_patch(rect)
    plt.show()
```

6

/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images/raccoon-17.jpg

W X H (259, 194)

/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images/raccoon-11.jpg

W X H (660, 432)

/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images/raccoon-63.jpg

W X H (600, 400)

/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images/raccoon-63.jpg

W X H (600, 400)

/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images/raccoon-60.jpg

W X H (273, 185)

/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images/raccoon-69.jpg

W X H (205, 246)
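The listing above prints raccoon-63.jpg twice. This happens because train_labels.csv stores one row per bounding box, so an image with two annotated boxes contributes two rows. The model built below predicts a single box per image, so it is useful to know which images carry more than one annotation. The sketch below groups boxes by filename using only the standard library. The sample rows and the helper name `boxes_per_image` are hypothetical. In the notebook you would pass `open(TRAIN_LABELS).read()` instead of the sample text.

```python
import csv
import io
from collections import defaultdict

# Hypothetical rows in the train_labels.csv column layout; values are made up
SAMPLE_CSV = """filename,width,height,class,xmin,ymin,xmax,ymax
img-a.jpg,600,400,raccoon,10,20,200,300
img-a.jpg,600,400,raccoon,300,40,580,390
img-b.jpg,259,194,raccoon,5,5,250,190
"""


def boxes_per_image(csv_text):
    """Group (xmin, ymin, xmax, ymax) boxes by filename: one CSV row = one box."""
    boxes = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        box = tuple(int(row[k]) for k in ("xmin", "ymin", "xmax", "ymax"))
        boxes[row["filename"]].append(box)
    return dict(boxes)


grouped = boxes_per_image(SAMPLE_CSV)
multi = [name for name, b in grouped.items() if len(b) > 1]
print(multi)  # ['img-a.jpg']
```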

```python
## Reading the CSV file
with open(TRAIN_LABELS) as csvFile:
    paths = []
    # One row per bounding box; subtract 1 for the header line
    coords = np.zeros((sum(1 for line in csvFile) - 1, 4))
    csvFile.seek(0)  # rewind after counting the lines
    csvReader = csv.reader(csvFile, delimiter=",")
    print(csvReader)
    next(csvReader, None)  # skip the header
    for i, row in enumerate(csvReader):
        # Columns: filename, width, height, class, xmin, ymin, xmax, ymax
        for j, r in enumerate(row):
            if j != 0 and j != 3:
                # Parse width, height, xmin, ymin, xmax, ymax to int (skip filename and class)
                row[j] = int(r)

        path, width, height, class_, xmin, ymin, xmax, ymax = row
        path = IMAGE_PATH + "/" + path
        paths.append(path)
        # Rescale the bounding box, because every image is resized to IMAGE_SIZE x IMAGE_SIZE (128 x 128)
        coords[i, 0] = xmin * IMAGE_SIZE / width
        coords[i, 1] = ymin * IMAGE_SIZE / height
        coords[i, 2] = xmax * IMAGE_SIZE / width
        coords[i, 3] = ymax * IMAGE_SIZE / height

print(len(paths))
print(coords.shape)
```

<_csv.reader object at 0x7f2edc553250>

173

(173, 4)
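Each box is rescaled because every image is later resized to 128 x 128 without keeping its aspect ratio. As a result, x and y scale by different factors (IMAGE_SIZE / width and IMAGE_SIZE / height). The sketch below applies the same arithmetic as the cell above in a standalone function. The name `scale_box` and the 400 x 200 example image are hypothetical.

```python
def scale_box(box, width, height, target=128):
    """Map an (xmin, ymin, xmax, ymax) box from a width x height image onto a
    target x target resized image; x and y are scaled independently."""
    xmin, ymin, xmax, ymax = box
    return (xmin * target / width, ymin * target / height,
            xmax * target / width, ymax * target / height)


# Hypothetical box in a 400 x 200 image
print(scale_box((100, 50, 200, 150), 400, 200))  # (32.0, 32.0, 64.0, 96.0)
```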

```python
### Generate image batches
# Array of shape (num_boxes, IMAGE_SIZE, IMAGE_SIZE, 3), filled with 0.0, to hold the images
batch_images = np.zeros((len(paths), IMAGE_SIZE, IMAGE_SIZE, 3), dtype=np.float32)

for i, path in enumerate(paths):
    img = Image.open(path)
    img = img.resize((IMAGE_SIZE, IMAGE_SIZE))
    img = img.convert('RGB')
    imgArray = np.array(img, dtype=np.float32)
    # MobileNet's preprocess_input scales pixel values from [0, 255] to [-1, 1]
    imgArrayNormalised = preprocess_input(imgArray)
    batch_images[i] = imgArrayNormalised

print(batch_images.shape)
```

(173, 128, 128, 3)
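For MobileNet, `preprocess_input` uses Keras's "tf" preprocessing mode, which rescales every pixel from [0, 255] to [-1, 1] with `pixel / 127.5 - 1`. The pure-Python sketch below repeats that arithmetic on single values so the normalized range is easy to check. `mobilenet_scale` is a hypothetical name. In the notebook, keep calling `preprocess_input` so that training and prediction use exactly the same transformation.

```python
def mobilenet_scale(pixel):
    """Scale a [0, 255] pixel value to [-1, 1], like MobileNet's preprocess_input."""
    return pixel / 127.5 - 1.0


print([mobilenet_scale(p) for p in (0, 127.5, 255)])  # [-1.0, 0.0, 1.0]
```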

```python
### MobileNet solution
ALPHA = 1.0
input_shape = (IMAGE_SIZE, IMAGE_SIZE, CHANNELS)
# include_top=False => drop the ImageNet classification layers
# alpha => width multiplier that scales the number of filters in every layer (1.0 = full width);
#          pretrained ImageNet weights (the default) exist for alpha 0.25, 0.5, 0.75 and 1.0
model = MobileNet(input_shape=input_shape, include_top=False, alpha=ALPHA)

# Freeze all pretrained layers so that only the new layer is trained
for layer in model.layers:
    layer.trainable = False

keras2ascii(model)
```
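The new layer added next depends on the shape of MobileNet's last feature map. MobileNet has five stride-2 stages, so each side of the input shrinks by a factor of 32. Its last block has `int(1024 * alpha)` channels. The sketch below computes that shape under the assumption that the input side is a multiple of 32. `mobilenet_feature_shape` is a hypothetical helper; `model.output_shape` is the authoritative value.

```python
def mobilenet_feature_shape(image_size, alpha=1.0):
    """Approximate output shape of MobileNet(include_top=False) for a square input.

    Assumes image_size is a multiple of 32 (five stride-2 stages).
    """
    if image_size % 32 != 0:
        raise ValueError("image_size should be a multiple of 32")
    side = image_size // 32
    return (side, side, int(1024 * alpha))


print(mobilenet_feature_shape(128))             # (4, 4, 1024)
print(mobilenet_feature_shape(128, alpha=0.5))  # (4, 4, 512)
```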

```python
## Add a new coordinate layer on top of the existing model
print("Adding new layers now*********")
x = model.layers[-1].output
# For a 128x128 input, MobileNet outputs a 4x4x1024 feature map. A Conv2D whose kernel
# covers the whole map (default 'valid' padding) outputs 1x1x4: the 4 box coordinates.
# The kernel size must equal IMAGE_SIZE / 32: 3 for 96, 4 for 128, 5 for 160, etc.
x = Conv2D(4, kernel_size=IMAGE_SIZE // 32, name='coords')(x)
# Flatten 1x1x4 into the 4 predicted coordinates (xmin, ymin, xmax, ymax) of one box
x = Reshape((4,))(x)

model = Model(inputs=model.input, outputs=x)
keras2ascii(model)
```
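`Conv2D` defaults to `padding='valid'` and a stride of 1. Its output side is therefore `(input - kernel) // stride + 1`. When the kernel is as large as the feature map, the output is a single 1 x 1 position with 4 channels, which `Reshape((4,))` turns into a coordinate vector. The sketch below reproduces that arithmetic for the image sizes named in the cell comment. `conv_output_size` is a hypothetical helper.

```python
def conv_output_size(input_size, kernel_size, stride=1):
    """Spatial output size of a 'valid' (unpadded) convolution."""
    return (input_size - kernel_size) // stride + 1


for image_size in (96, 128, 160):
    feature_side = image_size // 32  # side of MobileNet's last feature map
    kernel = feature_side            # kernel spans the whole feature map
    print(image_size, kernel, conv_output_size(feature_side, kernel))
# 96 3 1
# 128 4 1
# 160 5 1
```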

```python
### Define a custom metric IoU, which calculates Intersection over Union
# Boxes are (xmin, ymin, xmax, ymax) corners in IMAGE_SIZE-pixel coordinates.
from tensorflow.keras import backend as K

def IoU(y_true, y_pred):
    y_true = tf.cast(y_true, y_pred.dtype)
    # Corners of the intersection rectangle
    ix_min = tf.maximum(y_true[:, 0], y_pred[:, 0])
    iy_min = tf.maximum(y_true[:, 1], y_pred[:, 1])
    ix_max = tf.minimum(y_true[:, 2], y_pred[:, 2])
    iy_max = tf.minimum(y_true[:, 3], y_pred[:, 3])
    # Clip at 0 so that non-overlapping boxes contribute no intersection
    intersection = tf.maximum(ix_max - ix_min, 0.0) * tf.maximum(iy_max - iy_min, 0.0)

    # Union = area_gt + area_pred - intersection (a predicted box with negative size counts as area 0)
    area_gt = (y_true[:, 2] - y_true[:, 0]) * (y_true[:, 3] - y_true[:, 1])
    area_pred = tf.maximum(y_pred[:, 2] - y_pred[:, 0], 0.0) * tf.maximum(y_pred[:, 3] - y_pred[:, 1], 0.0)
    union = area_gt + area_pred - intersection

    # Pool over the batch: total intersection / total union. epsilon prevents division by zero
    return tf.reduce_sum(intersection) / (tf.reduce_sum(union) + K.epsilon())
```
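To sanity-check the metric by hand on a few boxes, the sketch below gives a plain-Python IoU for (xmin, ymin, xmax, ymax) boxes. It also includes a pooled variant that divides a batch's total intersection by its total union, which is how the metric above aggregates a batch. The function names are hypothetical. This is a checking aid only and does not replace the TensorFlow metric used during training.

```python
def _inter_union(a, b):
    """Intersection and union areas of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = max(0.0, a[2] - a[0]) * max(0.0, a[3] - a[1])
    area_b = max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])
    return inter, area_a + area_b - inter


def box_iou(a, b):
    """IoU of a single pair of boxes (0.0 when the union is empty)."""
    inter, union = _inter_union(a, b)
    return inter / union if union > 0 else 0.0


def pooled_iou(gts, preds):
    """Batch IoU as sum of intersections / sum of unions."""
    pairs = [_inter_union(g, p) for g, p in zip(gts, preds)]
    total_union = sum(u for _, u in pairs)
    return sum(i for i, _ in pairs) / total_union if total_union > 0 else 0.0


print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.1429
```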

```python
# Compile and configure model parameters
coordinates = coords

# Save the weights whenever the training IoU reaches a new maximum.
# monitor must be set: the default ("val_loss") does not exist without validation data.
checkpoint = ModelCheckpoint("model-{IoU:.2f}.h5", monitor="IoU", verbose=1, save_best_only=True,
                             save_weights_only=True, mode="max")
# Stop early if the training IoU has not improved for PATIENCE epochs
stop = EarlyStopping(monitor="IoU", patience=PATIENCE, mode="max")

# Reduce the learning rate (x0.2) if the training IoU does not improve for 10 epochs.
# With the same patience as early stopping, both callbacks fire on the same epoch.
reduce_lr = ReduceLROnPlateau(monitor="IoU", factor=0.2, patience=10, min_lr=1e-7, verbose=1, mode="max")
```
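EarlyStopping and ReduceLROnPlateau both count epochs in which the monitored value fails to improve. Both use a patience of 10 here, so they fire on the same epoch. The training log of this run confirms it: the learning rate is reduced at epoch 32 and training ends at that same epoch, so the lower rate is never used. The simplified counter below replays the IoU values from that log. `trigger_epoch` is a hypothetical helper that ignores `min_delta` and `cooldown`, so treat it only as an approximation of the Keras callbacks. In this replay, a ReduceLROnPlateau patience of 5 would have lowered the rate at epoch 27, which leaves a few epochs to train at the reduced rate.

```python
# Training IoU per epoch, copied from this run's log (epochs 1-32)
IOU_LOG = [0.1075, 0.5052, 0.5298, 0.5772, 0.6535, 0.6400, 0.6972, 0.7018,
           0.7204, 0.7303, 0.7143, 0.7627, 0.7620, 0.7675, 0.7663, 0.7627,
           0.7826, 0.7618, 0.7897, 0.7986, 0.7907, 0.8050, 0.7845, 0.8021,
           0.7899, 0.7882, 0.7954, 0.7848, 0.7692, 0.7822, 0.7863, 0.7989]


def trigger_epoch(values, patience):
    """1-based epoch at which a 'max'-mode plateau counter with this patience
    fires, or None. Simplified: no min_delta, no cooldown."""
    best = float("-inf")
    wait = 0
    for epoch, value in enumerate(values, start=1):
        if value > best:
            best, wait = value, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


print(trigger_epoch(IOU_LOG, 10))  # 32
print(trigger_epoch(IOU_LOG, 5))   # 27
```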

```python
## Fit model
model.compile(optimizer='Adam', loss='mse', metrics=[IoU])

model.fit(batch_images, coordinates, verbose=1, epochs=64, batch_size=24,
          callbacks=[stop, checkpoint, reduce_lr])
```

Epoch 1/64

173/173 [==============================] - 1s 6ms/step - loss: 3784.0423 - IoU: 0.1075

Epoch 2/64

72/173 [===========>..................] - ETA: 0s - loss: 763.2859 - IoU: 0.4102

/home/ashish/installed_apps/anaconda3/lib/python3.7/site-packages/keras/callbacks/callbacks.py:707: RuntimeWarning: Can save best model only with val_loss available, skipping.

'skipping.' % (self.monitor), RuntimeWarning)

173/173 [==============================] - 0s 2ms/step - loss: 527.0910 - IoU: 0.5052

Epoch 3/64

173/173 [==============================] - 0s 2ms/step - loss: 597.3788 - IoU: 0.5298

Epoch 4/64

173/173 [==============================] - 0s 2ms/step - loss: 429.7478 - IoU: 0.5772

Epoch 5/64

173/173 [==============================] - 0s 2ms/step - loss: 211.3445 - IoU: 0.6535

Epoch 6/64

173/173 [==============================] - 0s 2ms/step - loss: 215.9080 - IoU: 0.6400

Epoch 7/64

173/173 [==============================] - 0s 2ms/step - loss: 180.0469 - IoU: 0.6972

Epoch 8/64

173/173 [==============================] - 0s 2ms/step - loss: 154.7091 - IoU: 0.7018

Epoch 9/64

173/173 [==============================] - 0s 2ms/step - loss: 129.3547 - IoU: 0.7204

Epoch 10/64

173/173 [==============================] - 0s 2ms/step - loss: 122.0919 - IoU: 0.7303

Epoch 11/64

173/173 [==============================] - 0s 2ms/step - loss: 122.0040 - IoU: 0.7143

Epoch 12/64

173/173 [==============================] - 0s 2ms/step - loss: 99.1927 - IoU: 0.7627

Epoch 13/64

173/173 [==============================] - 0s 2ms/step - loss: 102.6646 - IoU: 0.7620

Epoch 14/64

173/173 [==============================] - 0s 2ms/step - loss: 91.9925 - IoU: 0.7675

Epoch 15/64

173/173 [==============================] - 0s 2ms/step - loss: 84.6869 - IoU: 0.7663

Epoch 16/64

173/173 [==============================] - 0s 2ms/step - loss: 81.3867 - IoU: 0.7627

Epoch 17/64

173/173 [==============================] - 0s 2ms/step - loss: 76.7604 - IoU: 0.7826

Epoch 18/64

173/173 [==============================] - 0s 2ms/step - loss: 84.9325 - IoU: 0.7618

Epoch 19/64

173/173 [==============================] - 0s 2ms/step - loss: 79.4098 - IoU: 0.7897

Epoch 20/64

173/173 [==============================] - 0s 2ms/step - loss: 73.0330 - IoU: 0.7986

Epoch 21/64

173/173 [==============================] - 0s 2ms/step - loss: 72.3371 - IoU: 0.7907

Epoch 22/64

173/173 [==============================] - 0s 2ms/step - loss: 67.5700 - IoU: 0.8050

Epoch 23/64

173/173 [==============================] - 0s 2ms/step - loss: 70.2793 - IoU: 0.7845

Epoch 24/64

173/173 [==============================] - 0s 2ms/step - loss: 67.5500 - IoU: 0.8021

Epoch 25/64

173/173 [==============================] - 0s 2ms/step - loss: 69.0528 - IoU: 0.7899

Epoch 26/64

173/173 [==============================] - 0s 2ms/step - loss: 64.7082 - IoU: 0.7882

Epoch 27/64

173/173 [==============================] - 0s 2ms/step - loss: 72.5488 - IoU: 0.7954

Epoch 28/64

173/173 [==============================] - 0s 2ms/step - loss: 71.5139 - IoU: 0.7848

Epoch 29/64

173/173 [==============================] - 0s 2ms/step - loss: 70.4084 - IoU: 0.7692

Epoch 30/64

173/173 [==============================] - 0s 2ms/step - loss: 70.7010 - IoU: 0.7822

Epoch 31/64

173/173 [==============================] - 0s 2ms/step - loss: 68.5914 - IoU: 0.7863

Epoch 32/64

173/173 [==============================] - 0s 2ms/step - loss: 63.1908 - IoU: 0.7989

Epoch 00032: ReduceLROnPlateau reducing learning rate to 0.00020000000949949026.

<keras.callbacks.callbacks.History at 0x7f2ea056ead0>

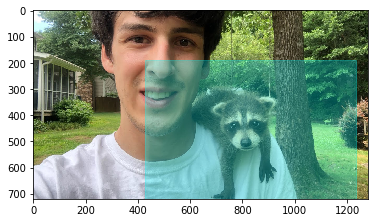
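The loss is a mean squared error in 128 x 128 pixel units, averaged over the four coordinates. Its square root therefore gives a rough per-coordinate error in pixels. This is only an approximation, because the logged value is averaged over the batches of the epoch. The sketch below uses the last loss from the log (63.1908). It also shows how that error grows when the box is mapped back to a 1280-pixel-wide image, such as the test image used below.

```python
import math

final_mse = 63.1908           # last epoch's loss from the training log (pixels squared)
rmse = math.sqrt(final_mse)   # rough per-coordinate error on the 128 x 128 input
print(round(rmse, 2))                  # 7.95
print(round(rmse * 1280 / 128, 1))     # 79.5, x error on a 1280-pixel-wide image
```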

```python
## Pick an image and show a box around the raccoon
# Either pick an image from the dataset by number...
imageNumber = 10
img_path = '/home/ashish/ai_ml/object_detection_dataset/raccoon_dataset-master/images/raccoon-{}.jpg'.format(imageNumber)
# ...or override it with an unseen test image
img_path = '/home/ashish/Downloads/raccoon_test_1.jpg'
# Convert to RGB so that grayscale or RGBA files also give 3 channels
imageObjectOriginal = Image.open(img_path).convert('RGB')
imageObjectScaled = imageObjectOriginal.resize((IMAGE_SIZE, IMAGE_SIZE))

## Extract features from the image in the form the model expects
features_scaled = preprocess_input(np.array(imageObjectScaled, dtype=np.float32))
print(features_scaled.shape)
# predict expects a batch, so wrap the single image and take the first result
region = model.predict(x=np.array([features_scaled]))[0]

print(region)
```

(128, 128, 3)

[ 42.84344 33.239838 123.591385 142.06334 ]

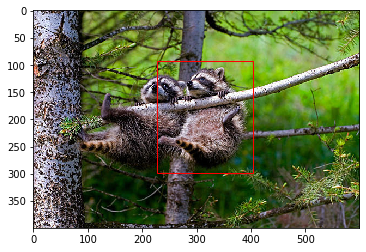

```python
## Draw bounding boxes for the scaled and unscaled images

print("Box on scaled image")
print('Scaled width & height ({}, {})'.format(imageObjectScaled.width, imageObjectScaled.height))
## Show the predicted box on the scaled image
plt.imshow(imageObjectScaled)
xmin, ymin, xmax, ymax = region
rect = Rectangle((xmin, ymin), xmax - xmin, ymax - ymin, linewidth=1, edgecolor='cyan', facecolor='none', alpha=0.3)
ax = plt.gca()
ax.add_patch(rect)
plt.show()

print("Box on unscaled image")
## Map the box back to the original image size and show it
width, height = imageObjectOriginal.size
print('Original width & height ({}, {})'.format(width, height))
xmin = (region[0] * width / IMAGE_SIZE)
ymin = (region[1] * height / IMAGE_SIZE)
xmax = (region[2] * width / IMAGE_SIZE)
ymax = (region[3] * height / IMAGE_SIZE)

print("({}, {}) , ({}, {})".format(xmin, ymin, xmax, ymax))
plt.imshow(imageObjectOriginal)
rect = Rectangle((xmin, ymin), xmax - xmin, ymax - ymin, linewidth=1, edgecolor='cyan', facecolor='none', alpha=0.3)
ax = plt.gca()
ax.add_patch(rect)
plt.show()
```

Box on scaled image

Scaled width & height (128, 128)

Box on unscaled image

Original width & height (1280, 720)

(428.43441009521484, 186.9740867614746) , (1235.9138488769531, 799.1062831878662)
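The predicted box extends past the bottom of the 1280 x 720 test image (ymax is about 799). This happens because the `coords` layer has no activation, so its outputs are unbounded. A common remedy is to map the prediction back to the original size and then clamp it to the image frame before drawing or scoring. The sketch below does both, using the values printed for `region` above. The helper names are hypothetical.

```python
def unscale_box(box, width, height, size=128):
    """Map a box predicted on the size x size input back to a width x height image."""
    xmin, ymin, xmax, ymax = box
    return (xmin * width / size, ymin * height / size,
            xmax * width / size, ymax * height / size)


def clip_to_image(box, width, height):
    """Clamp (xmin, ymin, xmax, ymax) so the box stays inside the image."""
    xmin, ymin, xmax, ymax = box
    return (max(xmin, 0), max(ymin, 0), min(xmax, width), min(ymax, height))


region = (42.84344, 33.239838, 123.591385, 142.06334)  # prediction printed above
box = unscale_box(region, 1280, 720)
clipped = clip_to_image(box, 1280, 720)
print([round(v, 1) for v in clipped])  # [428.4, 187.0, 1235.9, 720]
```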